Did you know that Intel’s Loihi 2 chip has about 1 million “neurons”? The human brain, by comparison, has roughly 86 billion. That gap shows how much headroom neuromorphic computing still has. It’s a field that tries to make computers work more like our brains.

Neuromorphic computing dates to the late 20th century, when Carver Mead introduced the idea in the 1980s. The technology could change how we think about computing and AI, but it needs new programming approaches and more robust hardware to deliver on that promise.

Quantum computing is another emerging technology, but it typically requires extremely low operating temperatures and a lot of power. Neuromorphic computing is drawing research attention as a different path. The goal is computers that handle ambiguous, hard-to-define problems more the way we do.

Key Takeaways

- Neuromorphic computing aims to replicate the human brain’s architecture in computer engineering, representing a shift from traditional computing methods.

- The Loihi 2 neuromorphic chip currently offers about 1 million “neurons,” while the human brain has approximately 86 billion.

- One of the key challenges of neuromorphic devices is the need for new programming languages, frameworks, and more robust hardware to align with the new architecture.

- Neuromorphic computing promises to redefine computing, AI, and our understanding of human cognition.

- Engineers and researchers are working to replicate the brain’s functionality to advance AI goals, particularly in probabilistic computing and processing ambiguous information.

Understanding Brain-Inspired Computing Architecture

The human brain has about 86 billion neurons and trillions of synapses. It is the model for neuromorphic engineering, which aims to build computing systems that work the way the brain does. These neuromorphic systems place memory and processing together so that data does not have to shuttle back and forth between them.

From Biology to Silicon: The Neural Connection

Scientists are translating the brain’s structure into silicon. IBM’s TrueNorth chip has one million neurons and 256 million synapses wired into a brain-like network. Intel’s Loihi system uses far less energy than conventional processors for some tasks, which suggests that bio-inspired architectures can work well.

Core Components of Neuromorphic Systems

Neuromorphic systems are built from parts that copy the brain’s neural coding: analog circuits, spiking neural networks, and in-memory computing. Commercial brain-inspired computers are expected to reach the market soon.

Biomimetic Design Principles

Neuromorphic design follows biomimicry principles: engineers aim to copy the brain’s energy-saving strategies. One example is in-memory computing, where memory and processing sit together on a chip. IBM’s Hermes chip does this with phase-change memory, and IBM’s NorthPole chip places memory directly alongside its compute cores.

The field of neuromorphic engineering is growing. It promises to open new areas in artificial intelligence and energy-saving data processing.

The Evolution of Computing: Beyond von Neumann

Traditional computing, based on the von Neumann model, has been the norm for decades. But as demand grows for more efficient and intelligent processing, its limits are becoming clear. Neuromorphic chips, inspired by the brain, offer a different way to process information.

In-memory computing uses emerging memory technologies such as phase-change memory (PCM) and resistive random-access memory (RRAM). Because computation happens where the data is stored, less data has to move, which simplifies the design and saves energy. Nanomaterials such as 2D materials are also being studied for neuromorphic systems, where they could improve scalability and efficiency.
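To make the idea of computing inside the memory array concrete, here is a minimal sketch of how a resistive crossbar could perform a matrix-vector product. It is an idealized illustration, not a model of any particular PCM or RRAM device: the function name `crossbar_mvm` and all values are assumptions made for this example, and real arrays also have to deal with noise, drift, and wire resistance.

```python
# Idealized sketch of in-memory computing on a resistive crossbar.
# All names and values are hypothetical and chosen for illustration only.

def crossbar_mvm(conductances, voltages):
    """Return the current collected on each output column.

    conductances[i][j] is the conductance (in siemens) of the memory cell
    that joins input row i to output column j. voltages[i] is the voltage
    applied to row i. Ohm's law gives each cell current as G * V, and
    Kirchhoff's current law sums those currents along every column, so
    the stored weights never leave the array.
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for g_row, v in zip(conductances, voltages):
        for j, g in enumerate(g_row):
            currents[j] += g * v
    return currents


# Two inputs and three outputs, with weights stored as conductances.
G = [[1e-6, 2e-6, 0.0],
     [3e-6, 0.0, 1e-6]]
V = [0.5, 0.2]  # input voltages in volts

I = crossbar_mvm(G, V)
print(I)  # column currents in amperes
```

The multiply-accumulate happens where the weights are stored, which is the data-movement saving the paragraph above describes.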

Neuromorphic computing changes how we think about processing information. By combining memory and processing, it addresses the data-movement bottleneck that traditional AI hardware faces. Advances in deep learning and algorithms have also helped the field. Information travels between neurons as “spikes,” which are short pulses.

Neuromorphic computing could be a step toward artificial general intelligence (AGI), meaning intelligence comparable to our own. It is already gaining ground in speech and image recognition, and in applications such as autonomous vehicles and medical devices.

| Neuromorphic Computing Projects | Key Statistics |
|---|---|
| BrainScaleS | 384 chips per 20-cm-diameter silicon wafer, each chip implements 128,000 synapses and up to 512 spiking neurons, resulting in around 200,000 neurons and 49 million synapses per wafer. |
| SpiNNaker | 57,600 identical 18-core processors, totaling 1,036,800 ARM968 cores, and is capable of simulating one billion neurons in real-time with more than one million cores. |
| TrueNorth | 4,096 hardware cores, simulating a total of just over a million neurons, and over 268 million programmable synapses. Consumes merely 70 milliwatts and is capable of 46 billion synaptic operations per second, per watt. |
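The totals in the table follow from simple multiplication, which the short check below reproduces. The BrainScaleS and SpiNNaker inputs come straight from the table. The TrueNorth per-core layout (256 neurons, each with 256 synapses) is not stated in this article and is included here as an assumption. Under that assumption the synapse count comes to 268,435,456, which is the same quantity as the “256 million” quoted earlier when counted in binary units (256 × 2^20).

```python
# Sanity check of the table's totals. Variable names are illustrative.

# BrainScaleS: 384 chips per wafer, figures per chip taken from the table.
brainscales_neurons = 384 * 512
brainscales_synapses = 384 * 128_000

# SpiNNaker: 57,600 processors with 18 cores each.
spinnaker_cores = 57_600 * 18

# TrueNorth: 4,096 cores. The 256 x 256 per-core layout is an assumption
# that is not given in the table above.
truenorth_neurons = 4_096 * 256
truenorth_synapses = 4_096 * 256 * 256

print(f"BrainScaleS neurons per wafer:  {brainscales_neurons:,}")
print(f"BrainScaleS synapses per wafer: {brainscales_synapses:,}")
print(f"SpiNNaker cores:                {spinnaker_cores:,}")
print(f"TrueNorth neurons:              {truenorth_neurons:,}")
print(f"TrueNorth synapses:             {truenorth_synapses:,}")
```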

As we delve deeper into neuromorphic computing, its promise grows. It’s a step towards more efficient, low-power, and brain-like processing. This shift in computing architecture is a big leap towards advanced artificial intelligence and changing the digital world.

Neuromorphic Computing: Bridging AI and Brain Science

Spiking neural networks are at the heart of neuromorphic computing. They work like the brain, sending information quickly and efficiently. Researchers at IIT-Delhi created the DEXAT model. It uses tiny memory devices to improve spiking neuron performance, showing promise for tasks like voice recognition.

Neuromorphic systems are great at doing many things at once and learning in real-time. This makes them more flexible and efficient than traditional AI.

Artificial Neural Networks vs. Spiking Neural Networks

Artificial neural networks are the foundation of traditional AI. Their units pass continuous values to one another at every step. Spiking neural networks, which are central to neuromorphic computing, instead send information in discrete bursts, as the brain does.
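The contrast can be sketched with a leaky integrate-and-fire neuron, one common simplified model of a spiking unit. This is a minimal illustration under assumed parameters (`threshold`, `leak`, and `reset` are arbitrary values picked for the example), not the neuron model used by any specific chip mentioned here.

```python
# Minimal leaky integrate-and-fire (LIF) neuron. Parameter values are
# hypothetical and chosen only to make the behavior easy to follow.

def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Return a list of 0/1 spikes, one entry per time step.

    The membrane potential decays by the factor `leak` each step, adds
    the incoming current, and emits a spike (then resets) once it
    reaches `threshold`. Between spikes the neuron outputs nothing.
    """
    potential = reset
    spikes = []
    for current in input_current:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset
        else:
            spikes.append(0)
    return spikes


# A constant input of 0.3 per step for ten steps.
spikes = simulate_lif([0.3] * 10)
print(spikes)  # [0, 0, 0, 1, 0, 0, 0, 1, 0, 0]
```

A conventional artificial neuron would emit a real-valued activation on every one of those ten steps. The spiking neuron stays silent on eight of them, which is where the potential energy saving comes from.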

This way of processing information lets neuromorphic systems tackle complex tasks better than digital computers. They’re good at recognizing patterns and handling sensory data.

Event-Based Processing Mechanisms

Neuromorphic systems use analogue circuits to mimic biological signals. Because processing is event-driven, only the neurons that are handling a spike are active at any moment, which saves energy. A system can still contain millions of neurons and synapses working together, enabling fast and efficient processing.
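A rough way to see why event-driven processing saves work is to count synaptic updates. The sketch below delivers spikes only along the outgoing connections of neurons that actually fired. The tiny network, the function name `propagate_events`, and the weights are all made up for illustration.

```python
# Event-driven spike delivery on a toy network. Everything here is a
# hypothetical example, not the scheduling scheme of a real chip.

def propagate_events(spiking_neurons, fan_out, weights, potentials):
    """Apply the spikes of `spiking_neurons` and return the work done.

    Only synapses that leave a neuron which fired are visited, so the
    number of operations scales with activity, not with network size.
    """
    operations = 0
    for pre in spiking_neurons:
        for post in fan_out[pre]:
            potentials[post] += weights[(pre, post)]
            operations += 1
    return operations


fan_out = {0: [1, 2], 1: [2, 3], 2: [3], 3: [0]}
weights = {(0, 1): 0.5, (0, 2): 0.25, (1, 2): 0.1,
           (1, 3): 0.4, (2, 3): 0.3, (3, 0): 0.2}
potentials = [0.0, 0.0, 0.0, 0.0]

# Only neuron 0 fires in this time step.
ops = propagate_events([0], fan_out, weights, potentials)
total_synapses = len(weights)
print(f"{ops} of {total_synapses} synapses updated; potentials = {potentials}")
```

A dense, clock-driven update would touch all six synapses every step regardless of activity.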

Neuromorphic computing shows great promise in bridging AI and brain science. It could lead to more advanced computing for many fields, from medicine to robotics. As it grows, combining neuromorphic methods with new technologies could change computing forever.

Key Technologies Enabling Neuromorphic Hardware

The future of AI processing is changing with neuromorphic computing. Companies like Intel, IBM, and Qualcomm are leading the way. They’re making chips that work like the brain, using new technologies to solve AI problems better.

These chips act like neurons and connect through artificial synapses. This lets them compute quickly and learn in real-time. They promise to make computing faster, cheaper, and more energy-efficient than old chips.

Memristor technology is a key driver in neuromorphic hardware. Memristors remember their state even when power is off. By adding memristors to chips, researchers can create artificial synapses that learn and adapt like the brain.

This mix of memristor tech and neuromorphic design could change AI processing. It could make computing systems more efficient and adaptable.

Neuromorphic hardware is also very energy-efficient and fast. It works well even when it’s cold, making it perfect for devices that run on batteries and edge computing. Research on new materials is also improving neuromorphic computing.

As neuromorphic engineering advances, it’s changing AI and computing. It’s opening up new areas like machine vision and natural language processing. The Netherlands is leading in this field, thanks to its strong AI and neuroscience research.

The mix of neuromorphic chips and memristor tech is creating a new AI processing era. This new era is more efficient, adaptable, and scalable than before. The possibilities for these brain-inspired systems are endless, from edge computing to healthcare.

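| Technology | Key Features | Advantages |
|---|---|---|
| Neuromorphic Chips | Artificial neurons connected through artificial synapses; real-time learning | Faster, cheaper, and more energy-efficient computing than conventional chips |
| Memristor Technology | Retains its state when power is off; serves as an artificial synapse that learns and adapts | More efficient and adaptable computing systems |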

As neuromorphic engineering matures, the range of uses for these systems should widen to include edge computing, autonomous vehicles, healthcare, and natural language processing.

Power Efficiency and Performance Benefits

Neuromorphic computing systems mark a large step forward in energy use and speed compared with conventional AI hardware. For large deep learning tasks, a big neural network running on neuromorphic hardware has been reported to use two to three times less energy than other AI models running on other hardware.

The human brain, with its roughly 86 billion neurons, runs on about 20 watts, comparable to an energy-saving light bulb.

Energy Consumption Comparison

Neuromorphic hardware is efficient because its circuits are active only when there is something to process. The new Loihi generation is expected to improve efficiency further through better chip-to-chip communication. That matters because communication inside a single chip uses about 1,000 times less energy than communication between chips, where action potentials have to be sent across chip boundaries.

Processing Speed Advantages

Neuromorphic systems can also be very fast. IBM’s TrueNorth chip can classify images at 1,200 to 2,600 frames per second while using only 25 to 275 milliwatts. That works out to more than 6,000 frames per second per watt, well ahead of graphics processing units.
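The frames per second per watt figure is simply throughput divided by power. The article does not say which frame rate was measured at which power level, so the sketch below only brackets the possibilities by pairing the extremes. Those pairings are assumptions, not reported measurements.

```python
# Throughput-per-watt arithmetic for the TrueNorth figures quoted above.
# The pairings of frame rate and power are hypothetical bounds.

def frames_per_second_per_watt(frames_per_second, milliwatts):
    """Convert a frame rate and a power draw in milliwatts to fps/W."""
    return frames_per_second * 1000.0 / milliwatts


# Slowest rate at the highest power, and fastest rate at the lowest power.
worst_case = frames_per_second_per_watt(1200, 275)
best_case = frames_per_second_per_watt(2600, 25)

print(f"lower bound: {worst_case:,.0f} fps/W")
print(f"upper bound: {best_case:,.0f} fps/W")
```

The quoted figure of about 6,000 frames per second per watt falls inside this bracket, near its lower end.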

This efficiency in energy use and speed makes neuromorphic computing a big deal in low-power AI and asynchronous information processing.

Applications in Real-Time AI Processing

Neuromorphic computing is well suited to tasks like image recognition and speech perception, and it is relevant to cybersecurity, health monitoring, and self-driving cars. Because devices can run intelligent workloads offline, it is a good fit for IoT and wearables. Smartphones might carry such chips as early as 2025, which would allow more intelligence on the device itself.

Neuromorphic systems are used in robotics and AI, improving pattern recognition and decision making. Intel, IBM, and Qualcomm are leading in this field. Places like Caltech, MIT, and Stanford are also pushing the boundaries.

Neuromorphic computing could make robots more autonomous and improve industrial inspections. It’s also enhancing computer vision for security, allowing for quick activity detection.

| Metric | Description |
|---|---|
| Compute Density | The number of computing elements (neurons, synapses) per unit area or volume. |
| Energy Efficiency | The ratio of computational work performed to the energy consumed. |
| Compute Accuracy | The precision and reliability of the computing elements in producing accurate results. |
| On-chip Learning | The ability of the neuromorphic system to adapt and learn directly on the hardware, without the need for external training. |
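As a worked example of the energy efficiency metric, the TrueNorth figures quoted earlier (46 billion synaptic operations per second per watt, at about 70 milliwatts) can be turned into energy per operation. This is back-of-the-envelope arithmetic, and it assumes the quoted efficiency holds at the quoted power level, which the article does not confirm.

```python
# Energy-efficiency arithmetic using figures quoted in this article.
# Function names are illustrative.

def energy_per_operation_joules(ops_per_second_per_watt):
    """Operations per second per watt equals operations per joule,
    so the energy per operation is the reciprocal."""
    return 1.0 / ops_per_second_per_watt


def sustained_ops_per_second(ops_per_second_per_watt, power_watts):
    """Throughput implied by an efficiency figure at a given power,
    assuming the efficiency is constant at that operating point."""
    return ops_per_second_per_watt * power_watts


SOPS_PER_WATT = 46e9   # synaptic operations per second per watt
POWER_WATTS = 0.070    # 70 milliwatts

picojoules_per_op = energy_per_operation_joules(SOPS_PER_WATT) * 1e12
ops_per_second = sustained_ops_per_second(SOPS_PER_WATT, POWER_WATTS)

print(f"{picojoules_per_op:.1f} pJ per synaptic operation")
print(f"{ops_per_second:.2e} synaptic operations per second")
```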

Neuromorphic computing is promising but faces challenges. Scaling up is hard, which limits the complexity of the tasks these systems can take on, and for general-purpose workloads they do not yet match traditional computers. High costs and a lack of standard metrics are further hurdles.

Despite these hurdles, neuromorphic computing is advancing. It’s set to bring more automation and smarter systems to many fields. As it grows, it will likely improve human-machine interaction and efficiency.

Memristor Technology and Synaptic Computing

Memristor technology is key in neuromorphic computing. It combines memory and processing, like the brain does. This makes computing more efficient.

Memristors act like artificial synapses. They help overcome old computing limits, saving energy and boosting intelligence.

Memory-Processing Integration

Memristors with different properties are needed for neuromorphic computing. They help make systems smaller and use less power. An artificial synapse has been made that learns like the brain, making it great for new computing devices.

Adaptive Learning Capabilities

The multi-memristive synaptic concept represents each synapse with several memristive devices instead of one, which improves synapse performance. Learning updates are spread across the devices in a balanced way. This method has been tested and works well for recognizing handwritten digits.
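One way to picture a multi-memristive synapse is as a group of bounded conductances whose sum is the weight, with a counter deciding which device receives each update. The sketch below follows that reading. The class name, the round-robin counter, and every parameter value are assumptions made for illustration, and real devices behave far less ideally than this linear model.

```python
# Toy model of a synapse built from several memristive devices.
# All names and numbers are hypothetical.

class MultiMemristiveSynapse:
    def __init__(self, n_devices=4, g_min=0.0, g_max=1.0, step=0.1):
        self.conductances = [g_min] * n_devices
        self.g_min = g_min
        self.g_max = g_max
        self.step = step
        self.counter = 0  # index of the device that gets the next update

    @property
    def weight(self):
        """The synaptic weight is the sum of all device conductances."""
        return sum(self.conductances)

    def update(self, direction):
        """Potentiate (+1) or depress (-1) one device, then advance the
        counter so that updates are shared evenly across devices."""
        i = self.counter
        new_value = self.conductances[i] + direction * self.step
        self.conductances[i] = min(self.g_max, max(self.g_min, new_value))
        self.counter = (self.counter + 1) % len(self.conductances)


synapse = MultiMemristiveSynapse()
for _ in range(5):
    synapse.update(+1)

print(synapse.conductances)
print(round(synapse.weight, 3))
```

After five potentiation steps, the first device has been updated twice and the other three once each, so no single device wears out or saturates ahead of the rest.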

Neuromorphic computing matters for energy savings because data center power demand is expected to keep rising. The brain runs on just 20 watts, which shows how large the potential savings are. Memristors have also shown high accuracy in recognizing handwritten images.

Event-Based Sensors and Vision Systems

In the world of neuromorphic computing, event-based sensors and vision systems are changing how we process visual information. They work differently than traditional computer vision, which looks at each frame separately. Instead, these systems watch for changes in what we see over time, just like our eyes do.

This method makes visual processing faster and more accurate. It’s perfect for things like self-driving cars and robots.

Event-based sensors, or neuromorphic vision sensors, capture visual info in a new way. Each sensor has two photodiodes that send out signals when light changes. This lets them respond to changes in light as quickly as 5 microseconds.

These sensors are not just fast. They can handle very bright and very dark scenes, with a dynamic range of more than 120 dB. They also use very little power, often less than 10 mW. This makes them well suited to mobile and autonomous devices.
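The behavior described above can be sketched for a single pixel: an event is emitted only when the log intensity has moved by more than a threshold since the last event. The sketch also converts the 120 dB figure into an intensity ratio. The `threshold` value, the function names, and the use of the 20·log10 decibel convention are assumptions for this example, not vendor specifications.

```python
import math

# Single-pixel sketch of event generation. Names and values are
# hypothetical and not taken from any sensor datasheet.

def dynamic_range_ratio(decibels):
    """Convert a dynamic range in dB to a brightest-to-darkest ratio,
    assuming the 20 * log10 convention."""
    return 10 ** (decibels / 20)


def generate_events(samples, threshold=0.2):
    """Return (sample_index, polarity) events for one pixel.

    An event fires when the log intensity differs from the level at the
    previous event by at least `threshold`. Polarity is +1 for brighter
    and -1 for darker. Unchanged input produces no output at all.
    """
    events = []
    reference = math.log(samples[0])
    for i, value in enumerate(samples[1:], start=1):
        delta = math.log(value) - reference
        if abs(delta) >= threshold:
            events.append((i, 1 if delta > 0 else -1))
            reference = math.log(value)
    return events


events = generate_events([100, 100, 101, 150, 150, 90, 90])
print(events)                    # [(3, 1), (5, -1)]
print(dynamic_range_ratio(120))  # 1000000.0
```

Seven samples produce only two events, which is why an event-based sensor sends so much less data than a camera that reports every pixel in every frame.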

Companies like Prophesee are leading the way with systems like Metavision, which can sample at rates above 1 kHz and uses as little as 2 mW. Because it reports only changes, it produces far less data than frame-based systems while still delivering high visual quality, which makes it both energy-efficient and capable.

| Technology | Key Specifications |
|---|---|
| AER Logarithmic Temporal Derivative Silicon Retina | 64×64 resolution |
| Artificial Visual Neuron | Multiplexed rate and time-to-first-spike coding |
| Vision Sensor | 128×128 resolution, 120 dB dynamic range, 15 µs latency |
| SpiNNaker System | Neuromorphic architecture |
| TrueNorth | 1 million neuron programmable neurosynaptic chip, 65 mW power consumption |
| Loihi | Neuromorphic manycore processor with on-chip learning |

As neuromorphic computing grows, event-based sensors and vision systems will be key. They offer unmatched efficiency and performance. They also help us understand how machines can see and understand the world like we do.

Challenges in Neuromorphic Engineering

The field of neuromorphic computing is growing, but it faces many challenges. They span both hardware and software, which reflects how complex the technology is, and they must be solved before it can reach its full potential.

Hardware Implementation Hurdles

Creating hardware that works like the brain is a big challenge. We still don’t fully understand the brain, making it hard to build electronic systems that mimic it. The brain’s structure is complex, and it’s hard to copy this in traditional computers.

The brain is very efficient with energy, using only about 20 W. Traditional computers use much more. Making neuromorphic hardware as energy-efficient as the brain is a big task. It needs new materials, better circuit designs, and ways to manage power.

Software Development Complexities

Writing software for neuromorphic systems is also tough. Traditional computing methods don’t work well with neuromorphic systems’ unique way of processing information. We need new ways to program these systems, which is a big challenge.

Neuromorphic engineering requires knowledge from many fields, like neuroscience and computer science. Combining these areas is hard. It’s important to find ways to work together for successful neuromorphic computing.

To move forward in neuromorphic computing, we must solve these hardware and software problems. Creating systems that are efficient with energy and can learn like the brain is very promising. It could lead to better data centers and artificial intelligence.

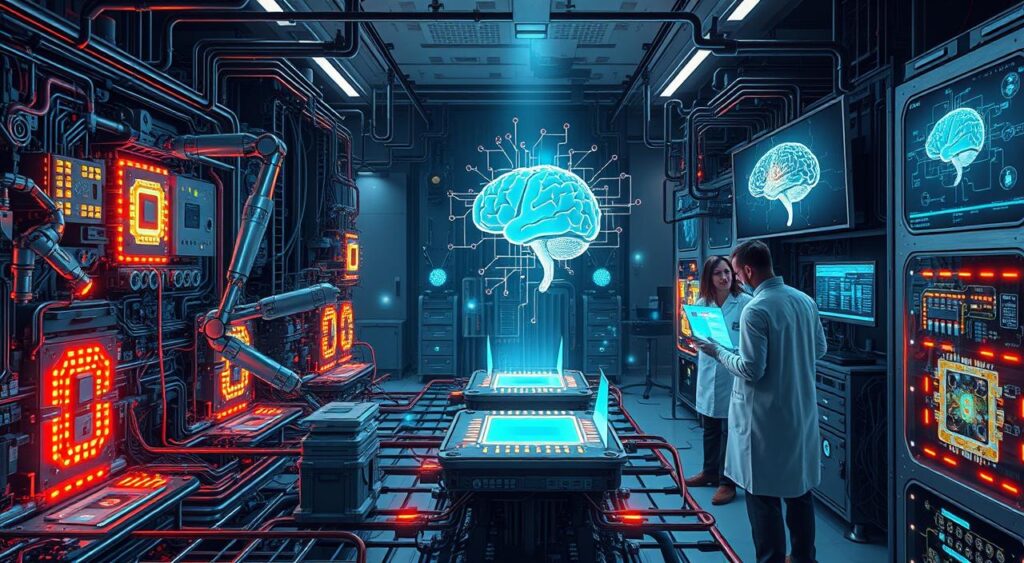

Industry Leaders and Research Initiatives

The world of neuromorphic computing is buzzing with activity. Major tech companies and research institutions are pouring money into this field. The Neuromorphic Commons (THOR) hub at the University of Texas at San Antonio is leading the charge with a $4 million grant from the National Science Foundation. It aims to create open-source software, host workshops, and set benchmarks for the community.

Cornell Tech has started a new graduate course in neuromorphic computing. Jae-sun Seo, an associate professor, is teaching it. BrainChip is also training students in AI engineering with its Akida™ IP technology. This technology uses less power than traditional neural network accelerators.

The neuromorphic computing market is worth $47 million now and could hit $1 billion by 2028. The U.S. Department of Defense is investing in this area too. The Center of Neuromorphic Computing under Extreme Environments (CONCRETE) is a $5 million project. It’s a partnership between the Air Force and the Rochester Institute of Technology to create devices for extreme environments.

These efforts are crucial as we need more energy-efficient and high-performance AI processing. The work of these leaders and initiatives is shaping the future of neuromorphic computing.

Comparing Traditional AI with Neuromorphic Systems

Traditional AI and neuromorphic systems differ in important ways. Traditional AI, which runs on von Neumann hardware, struggles with tasks that are ambiguous or that require fast learning. Neuromorphic systems, inspired by the brain, are strong at recognizing patterns, spotting anomalies, and learning on their own.

Processing Architecture Differences

Traditional AI uses algorithms and rules to handle information. Neuromorphic computing, however, uses hardware that looks and works like the human brain. This brain-like design lets neuromorphic systems process info more naturally and quickly, using less power for tasks like image and speech recognition.

Performance Metrics

Neuromorphic computing outshines traditional systems in many ways. It uses much less power. It can also do many things at once, like the brain, making it faster and more energy-efficient. IBM’s TrueNorth and Intel’s Loihi are examples of chips that show these benefits.

Neuromorphic computing also has its hurdles, including complex design and programming, a lack of standardization, and integration problems. Ongoing research aims to solve these issues and make the technology more accessible and widely used.

The world is changing fast with AI and computing. The contrast between traditional AI and neuromorphic systems shows the power of brain-inspired designs. By grasping these key differences, we can open up new areas in healthcare, robotics, and finance. This could lead to a future where AI and human thinking blend in amazing ways.

Future Applications and Market Potential

One forecast has the neuromorphic market reaching $1.78 billion by 2025. The technology is expected to change many areas, including AI-enhanced smartphones, IoT devices, autonomous vehicles, and space exploration.

In healthcare, neuromorphic chips could make medical sensors smarter and more adaptable. They also have big potential in defense, manufacturing, and robotics, changing how these fields work.

Market estimates vary widely. Another forecast has the neuromorphic computing market growing from $28.5 million in 2024 to $1,325.2 million by 2030, driven by its use in cars, space, and the industrial sector.
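To put the 2024 to 2030 forecast in perspective, the implied compound annual growth rate can be computed directly from the two endpoints. The sketch below is plain arithmetic on the figures quoted above, and the function name is an illustrative choice.

```python
# Implied compound annual growth rate (CAGR) from the quoted forecast.

def compound_annual_growth_rate(start_value, end_value, years):
    """Return the constant yearly growth rate that turns start_value
    into end_value over the given number of years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# $28.5 million in 2024 growing to $1,325.2 million by 2030.
cagr = compound_annual_growth_rate(28.5, 1325.2, 2030 - 2024)
print(f"Implied CAGR: {cagr:.1%}")
```

A growth rate of nearly 90 percent a year sustained for six years is very aggressive, which is worth keeping in mind when comparing this forecast with the other market figures in this article.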

New trends in neuromorphic tech include hybrid systems, edge AI, quantum computing, explainable AI, and brain-computer interfaces. Companies like Intel, IBM, Qualcomm, and Samsung are leading this change.

Neuromorphic AI is great at handling data, saving energy, and making quick decisions. As it keeps improving, the AI future looks very promising. It will bring new ways to live, work, and explore.

Integration with Emerging Technologies

Neuromorphic computing is inspired by the brain and works in a unique way. It’s set to work well with new technologies. This mix opens up new chances to make things better and bring new ideas to many fields.

Brain-machine interfaces are one area where neuromorphic chips can make a big difference. They could help people who are paralyzed move again by controlling robots with their minds. In robotics, these chips will give machines smart AI without using a lot of power, which is key for robots that work on their own.

Neuromorphic tech is also good for making augmented reality (AR) better. It makes AR more real and quick to respond, mixing the digital and real worlds better. Plus, it will make prosthetics work better, letting people control them more naturally and get better feedback.

As neuromorphic engineering grows, it will work well with other new tech like spintronics and quantum computing. This mix of brain-like processing, new materials, and advanced computing will lead to big changes in many areas. This includes healthcare, robotics, and even defense.

By using neuromorphic computing with new tech, companies and scientists can explore new ideas. This helps them improve and stay ahead in a world that’s always changing.

Conclusion

The future of computing is all about neuromorphic technology. It’s like the human brain but for machines. This new way of computing could change how we think about artificial intelligence and how we process information.

Neuromorphic computing is getting better and better. It’s becoming faster, more efficient, and adaptable. New technologies like spiking neural networks and memristors are making it possible.

This technology will change many areas, from computers to space travel. It will make AI work faster and smarter. It’s a big step towards making machines that think like us.

As neuromorphic computing grows, so do its possibilities. It’s shaping the future of how we interact with smart machines. Get ready for a world where machines think and act like us.